Got an idea? A vision?

We want to hear it.

Let’s Chat & Make It Happen…or email us at hello@bluewhaleapps.com and we’ll follow up promptly!

Working with a customer in the Health Care Industry and being a data aware company, we realized how much information a person’s audio file can contain. Working with audio files is not new, but only a handful of people really understand the hidden data that an audio can carry and see the potential of the same. Our partners in Health Care understood the value of information and relied on us to help them collect and extract meaning out of such data in a way that can benefit Health Care industry in helping individuals.

The problem at hand was to get an insight into the emotion of a person using audio samples collected during various conversations.

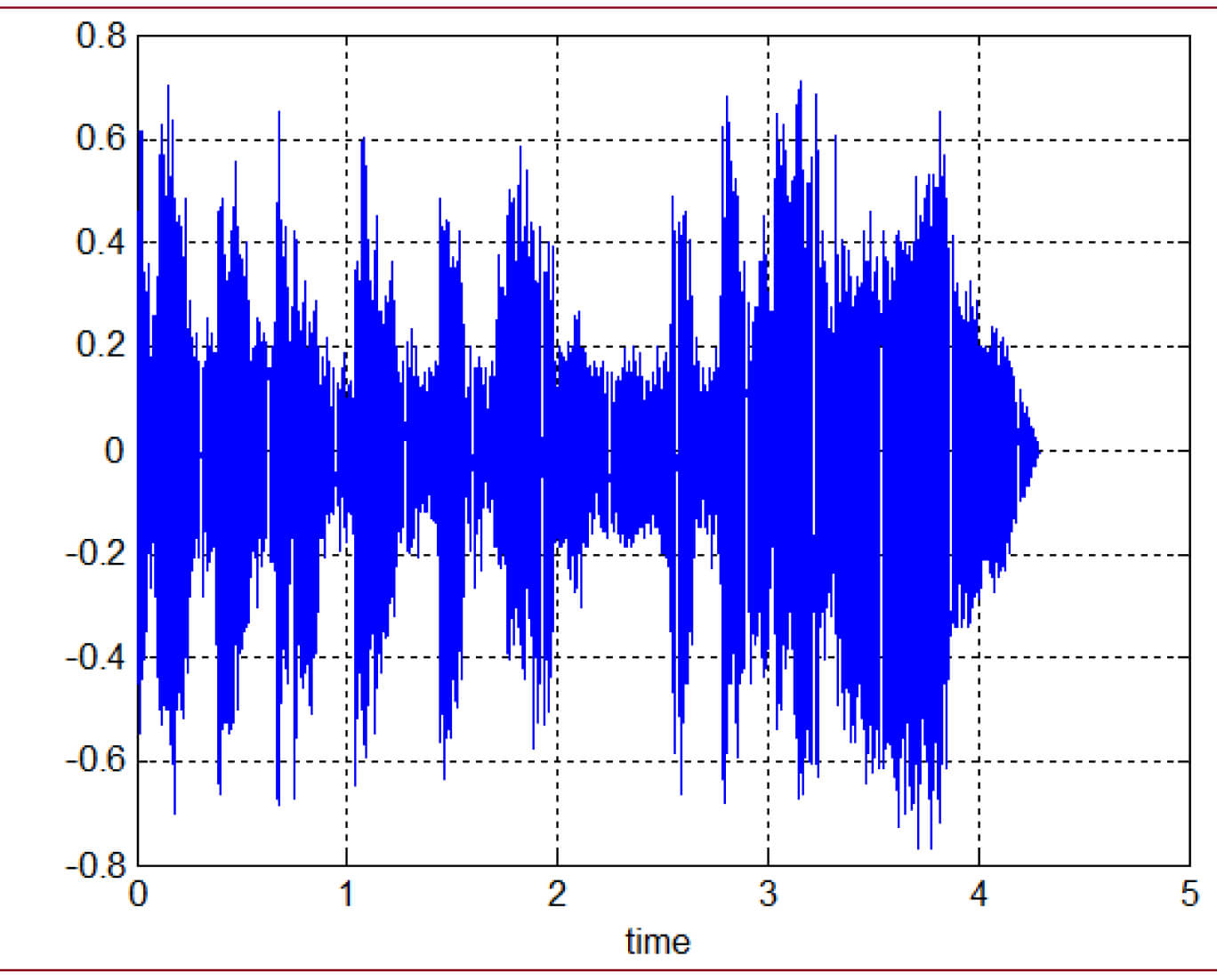

After comparing various audio files, we moved on to collecting the variations in audio files in terms of actual numbers from spectrograms. At the end of this process of feature collection, we had various different properties for each of our audio samples that included contrast and frequency coefficients among others.

While for researching and understanding audio data our self created data was good enough, it did not meet the criteria for the purpose of training our models. For one, the number of samples collected and used so far was way less than what will be needed for training purpose. Second and very important point was that the data used until now did not cover the wide variations that human speech can have. This means our model could end up being heavily skewed if not trained with wider variations.

So while a part of our team was busy developing models based on the knowledge and information gathering accomplished in previous steps, the other part of the our team got busy looking into various datasets. Fortunately for us, our team was able to get two very good datasets that met all our criteria for our needs.

We used the most common library used to split our dataset into training and test set and used 80-20 ratio for the same. Each sample in the dataset was labeled and formatted into an X,Y format, where X denoted the features and Y the label of the sample. While the labels were creates as strings like “happy”, “sad”, “angry” and so on, they were eventually encoded using standard libraries.

Model building is the most crucial phase of any Machine Learning or Deep Learning project. And this project was no different in that aspect. We spent a majority of our time, efforts and resources in this phase.

We started with some of the standard machine learning algorithms. While we tried various algorithms, SVC and Random Forest were the most noteworthy ones that came out on top in terms of training and testing accuracy based on model evaluation. We were however not satisfied with the accuracy numbers, even though the numbers were quite promising. Giving the field of application for this model, we wanted the accuracy to be much better than what we had achieved so far.

Even while the first round of our model building, scoring and evaluation was underway, our team started looking into Deep Learning algorithms. While these algorithms promise great accuracies when used appropriately, these are definitely more complex and need a lot of understanding of how these algorithms work behind the scenes. While the details of the final model that was chosen cannot be revealed, we have made it quite evident that one of the Deep Learning Algorithms came out as a clear winner.

Model building really went hand-in-hand with training and testing. The pickled model objects created by our developers were used by other members in the team tasked with Training, Scoring and Evaluation of those models. The scoring and testing finally decided on where to place a model when compared to other models developed our team. This gave input to developers to keep on trying to achieve better scores and finally build a model that satisfied the goals put forth by our team and customers.

The development phase of this project is over now and we are ready of how we are helping Health Care researchers and providers are using this as an essential tool to help those in need.